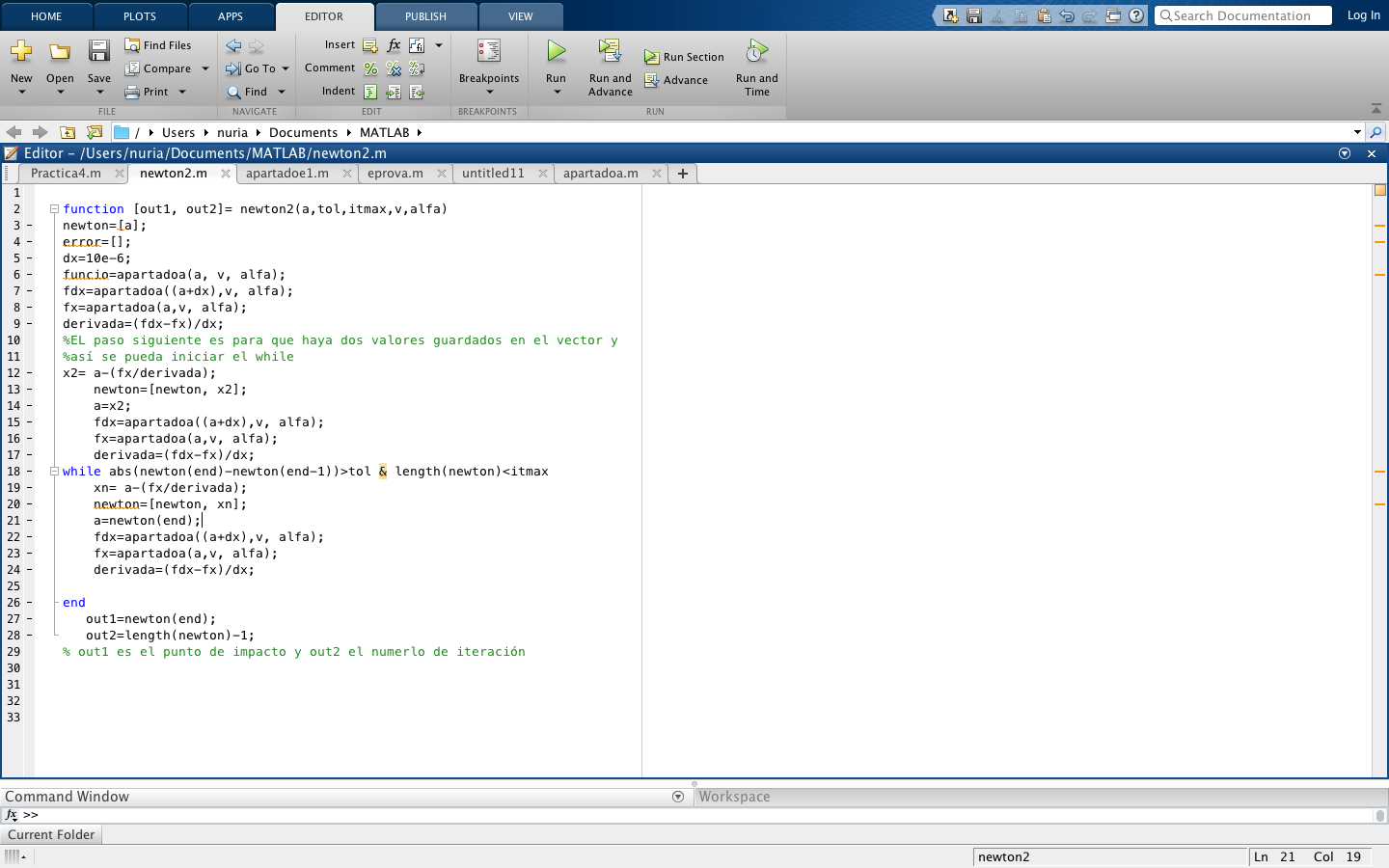

The computational cost of Newton’s method when dealing with a \(10,000 \times 10,000\) matrix tends to be prohibitive. Use gradient descent if you have a very large number of parameters, say 10,000. Use Newton’s method if you have a small number of parameters, say 10 to 50, where the computational cost per iteration is manageable, because you’ll converge in roughly 10 to 15 iterations. If \(\theta\) is 100,000-dimensional, each iteration of Newton’s method would require computing a \(100,000 \times 100,000\) matrix and inverting it, which is very compute-intensive. If \(\theta\) is 10-dimensional, this involves inverting a \(10 \times 10\) matrix, which is tractable.
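To make the scaling concrete, here is a rough back-of-the-envelope sketch. It assumes a dense Hessian stored in 8-byte floats and a direct solve whose cost grows as \(n^3\) with constants ignored; the function name `newton_step_cost` is purely illustrative and not part of any library.

```python
def newton_step_cost(n, bytes_per_float=8):
    """Rough per-iteration cost of a dense Newton step with n parameters.

    Assumptions: the Hessian is dense and stored in full, and the
    solve/inversion scales as n^3 (constant factors are ignored).
    """
    hessian_entries = n * n                        # the Hessian is n x n
    hessian_bytes = hessian_entries * bytes_per_float
    solve_ops = n ** 3                             # order-of-magnitude estimate
    return hessian_entries, hessian_bytes, solve_ops


for n in (10, 10_000, 100_000):
    entries, nbytes, ops = newton_step_cost(n)
    print(f"n={n:>7,}: {entries:.1e} Hessian entries, "
          f"{nbytes / 1e9:.3g} GB, ~{ops:.0e} operations per solve")
```

Under these assumptions, a 100,000-dimensional \(\theta\) needs \(10^{10}\) Hessian entries (about 80 GB) per iteration, whereas a 10-dimensional \(\theta\) needs only 100 entries.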

Each iteration of Newton’s method can be more expensive than one iteration of gradient descent when \(\theta\) is a high-dimensional vector, since it requires finding and inverting an \(n \times n\) Hessian; but so long as \(n\) is not too large, it is usually much faster overall. As seen in the section on quadratic convergence, Newton’s method typically enjoys faster convergence than (batch) gradient descent and requires fewer iterations to get very close to the minimum.

Gradient ascent is a very effective algorithm, but it takes baby steps at every iteration and thus needs a lot of iterations to converge. Newton’s method is another algorithm for maximizing \(\ell(\theta)\); it takes much bigger steps and thus converges much earlier than gradient ascent, usually in about an order of magnitude fewer iterations. However, each iteration tends to be more expensive.

To get started, let’s consider Newton’s method for finding a zero of a function. Specifically, suppose we have a function \(f: \mathbb{R} \to \mathbb{R}\), that is, a function of a one-dimensional (scalar) parameter \(\theta\), and we wish to find a value of \(\theta\) such that \(f(\theta) = 0\).
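Newton’s method for finding a zero of a scalar function, as described above, can be sketched as follows. This is a minimal illustration using the standard update \(\theta := \theta - f(\theta)/f'(\theta)\); the function name `newton_root`, the tolerance, and the example function \(f(\theta) = \theta^2 - 2\) are assumptions chosen for demonstration, not something taken from the text.

```python
def newton_root(f, f_prime, theta0, tol=1e-10, max_iter=50):
    """Find a zero of a scalar function f with Newton's method.

    f_prime is the derivative of f. Returns (theta, iterations_used).
    Assumes f_prime(theta) stays nonzero along the way.
    """
    theta = theta0
    for i in range(max_iter):
        step = f(theta) / f_prime(theta)   # Newton step: f(theta) / f'(theta)
        theta -= step
        if abs(step) < tol:                # stop once the update is negligible
            return theta, i + 1
    return theta, max_iter


# Hypothetical example: the positive zero of f(theta) = theta^2 - 2.
root, iters = newton_root(lambda t: t * t - 2.0, lambda t: 2.0 * t, theta0=1.0)
print(root, iters)
```

To maximize \(\ell(\theta)\) instead, the same routine can be applied to its derivative, passing \(\ell'\) as `f` and \(\ell''\) as `f_prime`, since a maximum of \(\ell\) is a zero of \(\ell'\).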
